Now available for WordPress

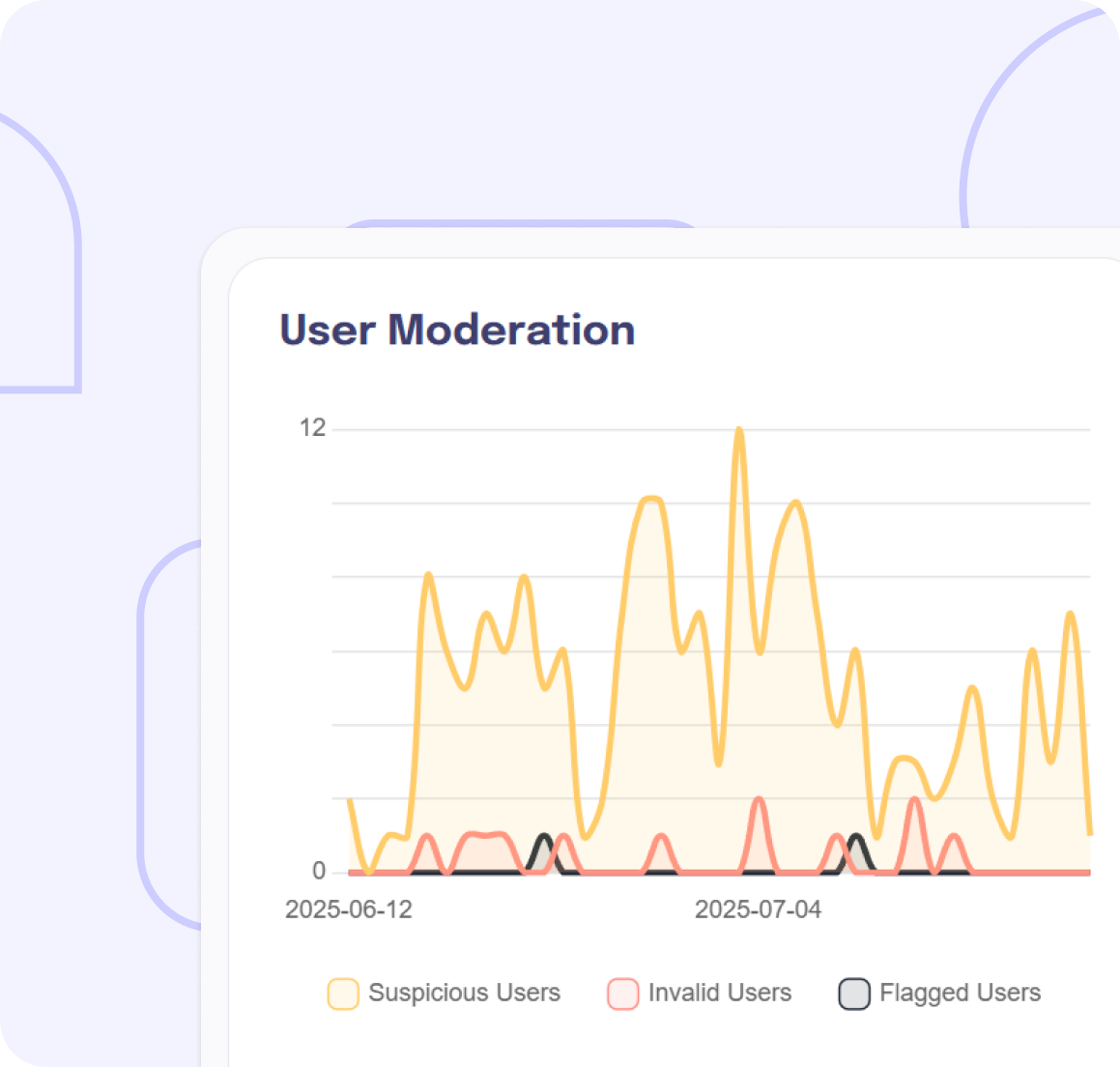

Toxic users, spam, and coordinated manipulation can drive away real audiences and damage your reputation. Trusted Accounts detects abusive behavior, flags toxic users, and helps your moderators keep conversations clean.

Flag Toxic Users

Identify and flag abusive or toxic users automatically.

Filter Bots & Duplicates

Filter bots and duplicate accounts driving fake engagement.

Stop Spam Campaigns

Detect spam campaigns and coordinated manipulation.

Protect Your Brand

Keep audience and campaign analytics accurate.

News & Publishers

Let your community grow.

Community Platforms

Stop fake accounts, spam, and poll manipulation. Keep your community real and trusted.

E‑Commerce

Prevent promo abuse, fake reviews, and account fraud. Protect customers and your revenue.

SaaS Platforms

End trial abuse, bot signups, and fake accounts. Keep analytics and resources clean.

Marketing

Cut click fraud, fake leads, and bot traffic. Optimize campaigns with real data.

Whether you run a news site, e‑commerce store, or community platform, the threats are the same. Here’s how we protect you from the most damaging ones.

“In Trusted Accounts, we see the potential to increase our verification rate and thus be able to moderate fake accounts, trolls, hate and disinformation more efficiently.”

Head of Product, DER STANDARD

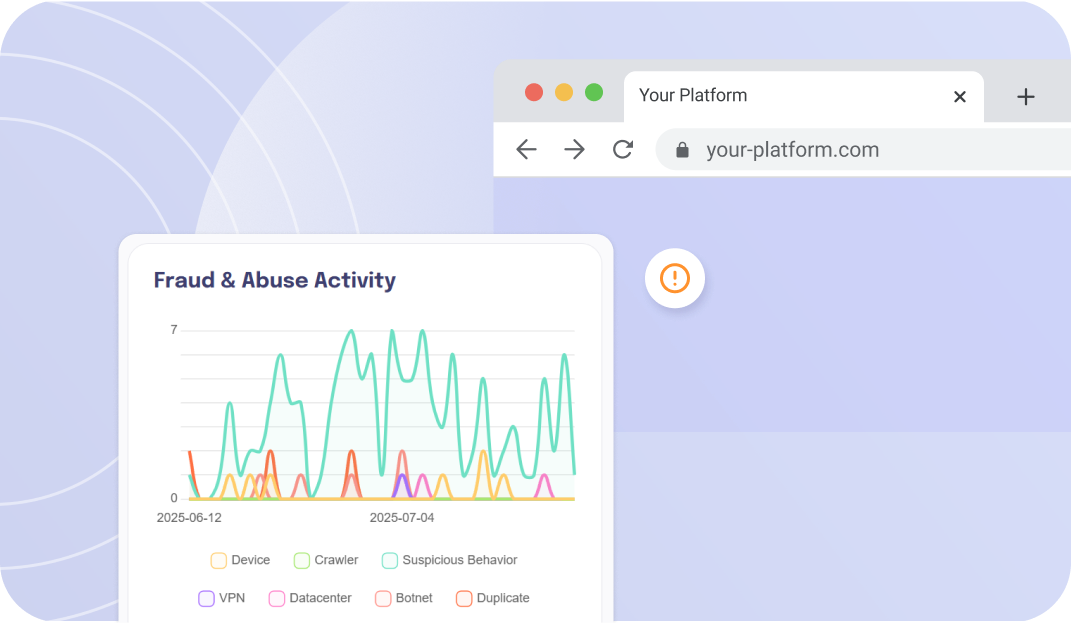

Fraud & Abuse Protection

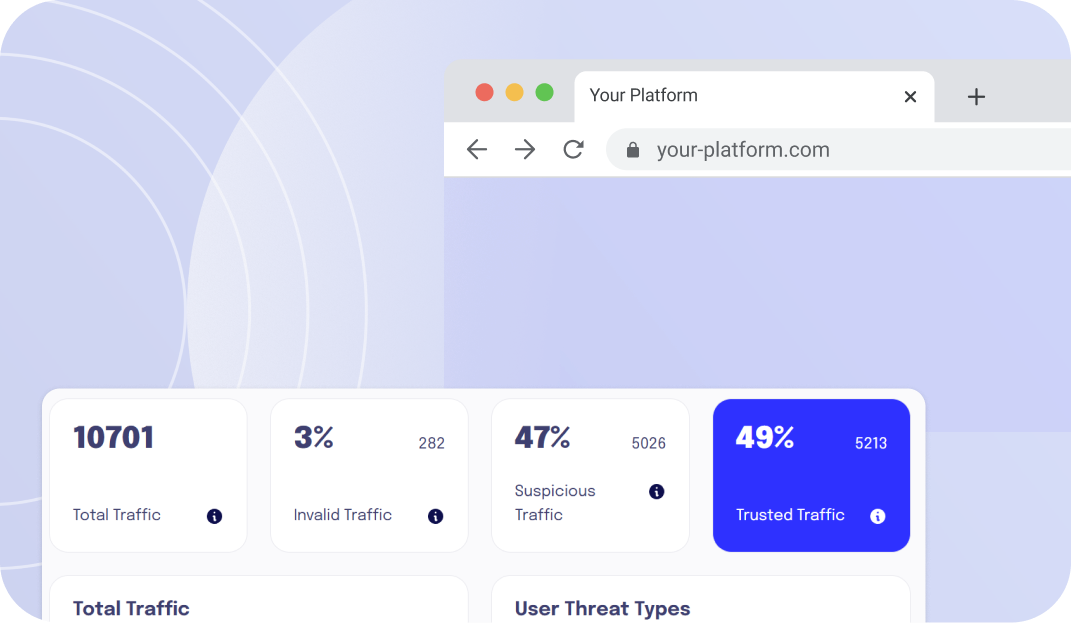

Spot fake accounts, ad fraud, survey manipulation, disinformation, and more.

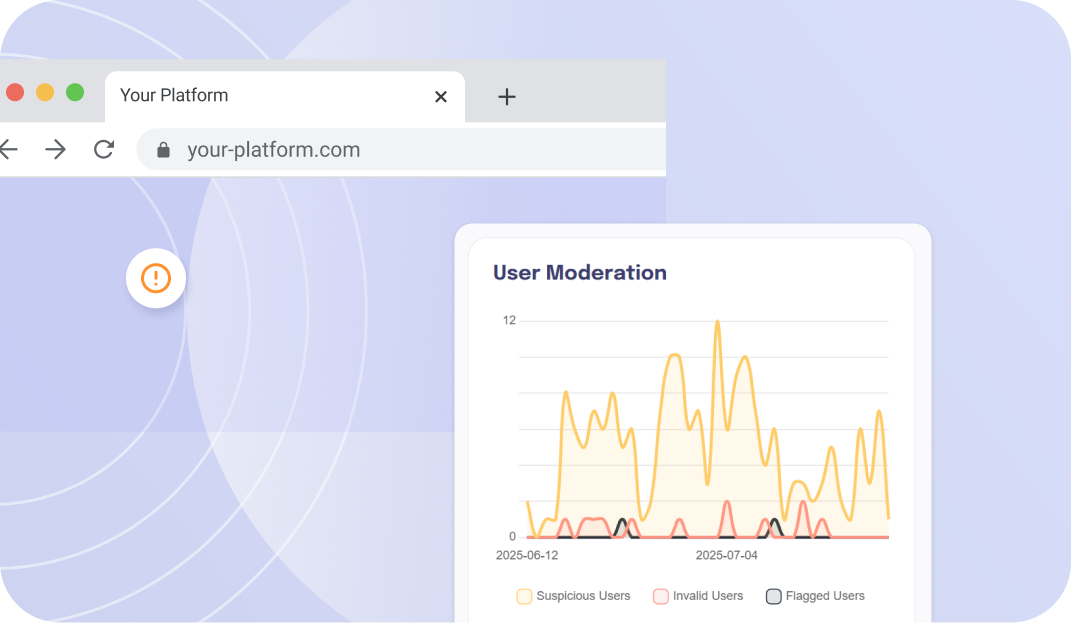

Account Protection

Silent checks for authentic users, extra checks only for suspicious users.

Stop Bots and Abusive Users: Protect Your WordPress Site with Trusted Accounts

Future-Proofing Content Moderation: Use Cases for 2024

Bot Protection - Top 7 Tools for 2024

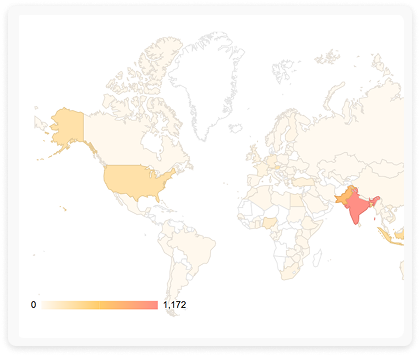

We combine behavioral signals and user validation to identify invalid, spammy, or coordinated behavior in real time.

No. We make your moderators' jobs easier by filtering obvious abuse and surfacing only high-risk cases for review.

Yes — we detect patterns in account creation, posting, and engagement to stop manipulative behavior.

Yes — we integrate with major CMS platforms, community tools, and custom systems.

Most platforms see spam and toxic content reduced within the first week of deployment.
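
For readers who want a feel for what combining behavioral signals with user validation can look like, here is a minimal sketch. It is not the Trusted Accounts implementation or API: the `Signals` fields, the weights, and the threshold are all hypothetical and chosen only for illustration. The idea is that ordinary users pass silently while only high-scoring accounts get an extra check.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical per-account signals (names are assumptions, not a real API)."""
    posts_last_hour: int
    duplicate_content_ratio: float  # 0.0 to 1.0, share of posts repeating earlier text
    account_age_days: int
    is_validated: bool  # whether the user has passed some validation step


def risk_score(s: Signals) -> float:
    """Combine the signals into a score between 0 and 1 (illustrative weights)."""
    score = 0.0
    if s.posts_last_hour > 20:      # unusually high posting rate
        score += 0.4
    score += 0.4 * s.duplicate_content_ratio
    if s.account_age_days < 1:      # brand-new account
        score += 0.2
    if s.is_validated:              # validation lowers, but does not erase, risk
        score *= 0.7
    return min(score, 1.0)


def decide(s: Signals, threshold: float = 0.5) -> str:
    """Return 'allow' for low-risk users and 'extra_check' for suspicious ones."""
    return "extra_check" if risk_score(s) >= threshold else "allow"


regular_user = Signals(posts_last_hour=2, duplicate_content_ratio=0.0,
                       account_age_days=400, is_validated=True)
suspicious_user = Signals(posts_last_hour=50, duplicate_content_ratio=0.9,
                          account_age_days=0, is_validated=False)

print(decide(regular_user))
print(decide(suspicious_user))
```

In a real deployment the weights and threshold would be tuned on observed traffic rather than fixed by hand, and the "extra check" step could be anything from an additional verification to routing the case to a moderator.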